If intelligence and consciousness can indeed be reduced to a series of mathematical models, then carbon-based human beings are a much better deployment vehicle than computers, their silicon-based counterparts. Carbon-based systems have been perfected over millions of years through a slow-but-steady Darwinian evolutionary process, while their silicon-based counterparts have been evolved by human beings themselves over the last ~70 years. Who will outdo whom, and at what point in time, is a debate that has been raging for the past several decades, but never before has it been so skewed towards Artificial Intelligence (AI).

So then what is AI?

Descriptive, Predictive, Prescriptive and Autonomous AI

One way to think about AI is in terms of Descriptive, Predictive, and Prescriptive analytics, with the next step leading to Autonomous AI. Descriptive analytics explains the data through visualization and basic statistics, predictive analytics helps one predict future events, and prescriptive analytics prescribes an action to a human in response to a predicted event. Autonomous AI takes it further: the AI system not only prescribes actions but is also trusted to take them without human involvement.
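The four stages can be sketched on a toy inventory problem. This is only an illustration under stated assumptions: the sales figures, the stock level, and every function name below are made up, and the straight-line forecast stands in for whatever predictive model a real system would use.

```python
from statistics import mean

# Hypothetical daily unit sales for one product (made-up numbers).
sales = [10, 12, 14, 16, 18]
stock_on_hand = 15


def describe(history):
    """Descriptive: summarize what has already happened."""
    return {"mean": mean(history), "min": min(history), "max": max(history)}


def predict_next(history):
    """Predictive: fit a least-squares straight line and extend it one step."""
    n = len(history)
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(history)
    slope = (
        sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, history))
        / sum((x - x_bar) ** 2 for x in xs)
    )
    intercept = y_bar - slope * x_bar
    return intercept + slope * n


def prescribe(forecast, stock):
    """Prescriptive: recommend an action; a human decides whether to take it."""
    shortfall = forecast - stock
    return f"reorder {shortfall:g} units" if shortfall > 0 else "no action"


def act_autonomously(forecast, stock, place_order):
    """Autonomous: take the action directly, with no human in the loop."""
    shortfall = forecast - stock
    if shortfall > 0:
        place_order(shortfall)
```

The only difference between the last two functions is who acts on the recommendation: `prescribe` returns text for a person to read, while `act_autonomously` calls the (hypothetical) `place_order` callback itself.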

Narrow AI, General AI, Conscious AI

Another popular way to think about AI is in terms of Narrow AI, General AI (Artificial General Intelligence or AGI), and Conscious AI (or Super AI). All the progress we have seen is in the field of Narrow AI, where the AI system becomes very good at just one specialized thing. For example, DeepMind’s AlphaGo could beat the world champion of Go, yet it cannot play much simpler games like Noughts and Crosses. Similarly, an algorithm that has learned only to identify a Labrador cannot identify a Dalmatian. Such systems are very good at one specific task, sometimes even better than humans, but quickly become helpless the moment they are required to do anything off-script. “Being good at just one thing” is best suited to business use cases pertaining to “automation at scale”, where we expect machines to do only one task but do it well and do it at scale. Businesses can simply deploy many such “Narrow AI” machines, each with a specific capability, and automate part of a business process. Almost all of today’s business applications of AI lie in this field of “Narrow AI”. Conversational agents like Alexa join many Narrow AI capabilities together, which are called “skills” in Amazon parlance. When we speak to a human voice, we expect human-like intelligence, while in fact these conversational agents are just a collection of Narrow AIs stitched together behind a human voice.
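The idea of narrow skills stitched together behind one voice can be sketched as a simple dispatcher. This is a hypothetical illustration, not how Alexa or any real assistant is implemented: the skill functions, the keyword table, and the canned replies are all assumptions made for the example.

```python
def weather_skill(utterance):
    # Stand-in for a narrow model that only knows about weather.
    return "It looks sunny today."


def timer_skill(utterance):
    # Stand-in for a narrow model that only knows about timers.
    return "Timer set."


# Hypothetical routing table: trigger keyword -> narrow skill.
SKILLS = {"weather": weather_skill, "timer": timer_skill}


def handle(utterance):
    """Route the request to the first skill whose keyword appears in it."""
    words = utterance.lower().split()
    for keyword, skill in SKILLS.items():
        if keyword in words:
            return skill(utterance)
    # Anything off-script falls through: no single skill generalizes.
    return "Sorry, I can't help with that."
```

A request such as `handle("Play chess with me")` falls through to the fallback reply, which is the off-script helplessness described above.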

“General AI” or Artificial General Intelligence (AGI) implies a general intelligence in a machine, just like a human being has. Technically, AGI is akin to having a single entity (a large piece of code) that is able to learn and perform any intellectual task, and we have many problems to solve before that will be possible. We first need to understand what intelligence is and how to measure it, which we still cannot do. Transfer learning is another challenge where we have had limited success. For example, we can build a ‘language model’ by deep learning from a large document source, and with some fine-tuning, we can use it for classification and sentiment analysis. But human beings play at a different level: they can transfer insights gathered from one situation and apply them to another similar situation with ease. My nine-month-old son has recently learned that if he makes certain kinds of hand and facial gestures, then the ecosystem around him suddenly becomes much more conducive to how he wants it to be. He learned it from one situation but has quickly transferred his learning to almost every other situation he encounters. One-shot learning, wherein just one experience is enough to modify the behavior of an AI, is another unsolved problem. Zero-shot learning is when a machine is able to master a task it has not seen before. Transfer learning is an important ingredient for any implementation of one-shot or zero-shot learning. Another unsolved area in AGI is imagination-based learning. Human beings have a habit of coming up with new insights while just playing or replaying a certain situation in their heads; AGI is far from accomplishing this.

The last one, Conscious AI, is similar to AGI but goes one step further. It also deals with the question of when an intelligent being can be called conscious. Is all consciousness the same, e.g., is the consciousness of a dog the same as that of a spider? If they are different, then we are essentially saying that more intelligent beings have higher consciousness. If that’s the case, then can we extend the same argument to human beings? Suppose we perfect an accurate way of measuring human intelligence; would we then say that the consciousness of human beings is proportional to their intelligence? If we are conferring rights on the basis of the level of consciousness, which in turn has some degree of proportionality with intelligence, then what rights do we give to machines that are far more intelligent than us?

AI and its connection to Liberal Arts Subjects

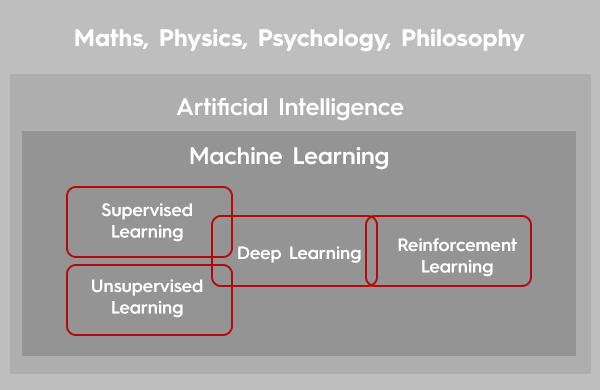

This is better depicted diagrammatically. The diagram above encapsulates the scope: it shows the relationships between the scope of AI, the various types of machine learning, and the liberal arts subjects to which AI is related.

AI is not new, but this time it is different

The past 50-60 years have seen several waves of AI hype. In 1970, Marvin Minsky, co-founder of MIT’s AI laboratory, predicted that in 3 to 8 years a machine would have the general intelligence of a human being. It didn’t turn out to be the case. In the 1980s there was hype around expert systems, but this idea of solving problems by creating rules from human experts and encoding them in computer systems didn’t turn out the way it was envisaged. AI funding dried up, leading to the “AI winter” (or more accurately, Deep Learning winter), which lasted till the late 2000s. The biggest AI wave started about 15 years ago, facilitated by the availability of big data to learn from, the popularity of cloud computing, which in turn made GPUs accessible for processing that data, and the availability of enough computation power on mobile phones to run AI models. Unlike the previous waves, this time AI has brought about business value: think shopping recommendations, autonomous cars and drones, medical diagnosis, image recognition, autonomous weapon systems, automated fraud detection, credit risk prediction, conversational agents, etc. With continued investment, it is fair to expect the continued evolution of the mathematics and technology behind AI.

To conclude

A large part of the mathematics currently deployed in AI models is about finding the function (f) which transforms given inputs to desired outputs (e.g., given a picture, does it show a curable cancer, or given a student, would they drop out of college?), or about treating an environment as a Bayesian probability distribution and finding the best way to modify it (e.g., trading software buying a large number of shares without affecting the price). This works well for use cases where the problem space is closed, which is where all the successfully deployed AI systems operate. For open-ended use cases, which is where most research in unsupervised learning is directed, we may require an entirely different approach. With quantum computing on the horizon, there could be a big leap in terms of what’s achievable. Training a sophisticated deep learning algorithm currently takes hundreds of GPUs running for weeks. If quantum-enhanced machine learning and reinforcement learning make it possible to rapidly train and create a deep learning model, then we are staring at a very “non-linear” future of AI, even with the currently deployed mathematics.
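To make "finding the function (f)" concrete, here is a minimal sketch that learns a straight line from example input/output pairs by gradient descent. It is an illustration under stated assumptions only: the data is made up (generated from y = 3x + 1), the names `fit_line`, `learning_rate`, and `steps` are hypothetical, and real models fit far richer functions with the same basic idea of reducing prediction error step by step.

```python
# Toy training pairs generated by the hidden rule y = 3x + 1 (made-up data).
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
targets = [1.0, 4.0, 7.0, 10.0, 13.0]


def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Find w and b so that f(x) = w*x + b approximates ys.

    Uses plain gradient descent on the mean squared error.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every training example at the current w, b.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = fit_line(inputs, targets)


def f(x):
    """The learned function: maps a new input to a predicted output."""
    return w * x + b
```

After training, `w` and `b` end up close to 3 and 1, so `f` recovers the hidden rule from examples alone. This is the closed problem space mentioned above: the inputs, outputs, and error measure are all fixed in advance.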

Now that we have a high-level appreciation of AI, I will next cover my views on the mathematical and technological landscape currently in use in AI, the use cases associated with it, and the journey ahead.

Satish Srivastava

Associate Director- Solutioning and Propositions